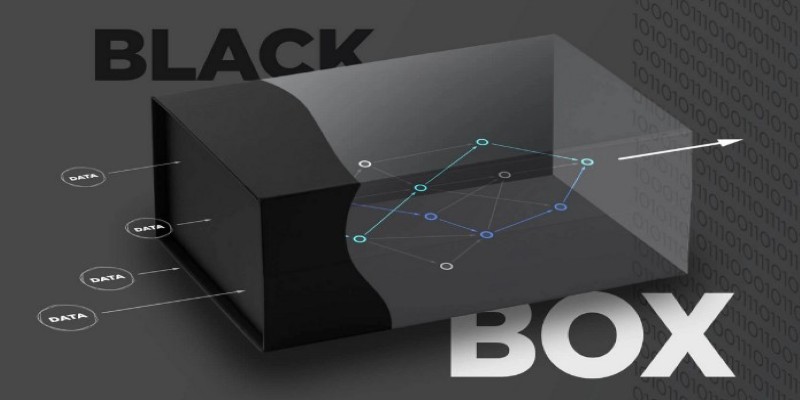

AI black boxes often get brought up in conversations around trust, safety, and transparency. The term itself isn’t about physical machines or any mysterious device—it points to AI models whose inner logic is hidden from view. You give the input, and you get the output. What happens in between? That's the part people call the "black box."

These models can be incredibly accurate, but accuracy doesn’t always mean we understand why they make certain decisions. That lack of clarity raises serious concerns, especially when decisions affect real lives, as in healthcare, hiring, or loan approvals. So, how exactly do these black boxes work?

An AI model turns into a black box when it becomes so complex that humans can’t easily trace how each input leads to each output. This is especially true in deep learning, where layers upon layers of artificial neurons work together. These neurons pass data to each other in ways that aren’t directly explainable. You can see what went in, and you can see what came out—but tracing the exact path is nearly impossible without extra tools.

This kind of setup isn’t always intentional. In fact, it's often the result of trying to boost performance. The better a model gets at picking up patterns, the harder it becomes to explain how it found them. So, ironically, the more accurate some AI models become, the less we understand them.

There are several ways that these systems operate under the hood, and understanding them helps explain why transparency becomes a challenge.

Neural networks are loosely inspired by how brains process information. Each "neuron" is a basic unit that processes information and passes it along, and neurons are grouped into layers. When you stack a few of these layers, you get a standard neural network. But modern AI often uses deep neural networks, which can have dozens or even hundreds of layers. Each layer transforms the data in a slightly different way.

For example, in an image recognition task, early layers might pick up lines and edges, while deeper layers start identifying shapes or even full objects. The issue? These layers build up a chain of processing that’s nearly impossible to unwind. You don’t get a clear logic path like, “If the image has stripes, and it’s orange, it’s a tiger.” You get a long flow of numbers.
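To make the contrast concrete, here is a minimal sketch in Python. The rule function mirrors the tiger example, while the tiny two-layer network uses hand-picked weights (`W1`, `B1`, `W2`, `B2`) that are purely hypothetical and stand in for the millions of values a real model would learn. All function names are invented for illustration.

```python
import math

def rule_based_is_tiger(has_stripes, is_orange):
    """A hand-written rule: every condition is readable."""
    return has_stripes and is_orange

def dense_layer(inputs, weights, biases):
    """One fully connected layer: weighted sums passed through tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hypothetical weights, chosen by hand for illustration only.
# A trained network would hold thousands or millions of these.
W1 = [[0.5, -1.2, 0.3], [0.8, 0.1, -0.7]]
B1 = [0.0, 0.1]
W2 = [[1.5, -2.0]]
B2 = [0.2]

def network_score(pixels):
    """Run the input through two layers and return the intermediate values too."""
    hidden = dense_layer(pixels, W1, B1)
    output = dense_layer(hidden, W2, B2)
    return hidden, output[0]

hidden, score = network_score([0.9, 0.1, 0.4])
print("rule says tiger:", rule_based_is_tiger(True, True))
print("hidden activations:", hidden)
print("network score:", score)
```

The rule can be read aloud. The network's answer is just the last number in a chain of numbers, and nothing in `hidden` says "stripes" or "orange."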

One reason for the lack of transparency is how AI decides what's important. It creates features—patterns or traits—from the input data. But in many models, these features are abstract. A model might decide that some blend of pixel arrangements means “cat,” but there’s no human-friendly label for what that feature is. We just know the model thinks it's important.

Unlike rule-based systems, where each condition is written out clearly, these features are buried deep in numerical layers. You can't always tell if a model spotted something useful or something coincidental.

Training an AI model involves a bit of randomness. Most deep learning systems start with random weights, meaning they begin by guessing. Then they gradually adjust those guesses based on how wrong they are. Over time, they get better.

But here’s the twist: if you train the same model twice on the same data, you might get two slightly different versions. Each will make similar predictions, but the internal weights behind those predictions can differ. That randomness adds another layer of mystery, making it harder to trace decisions back through the model.
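A toy sketch can show the effect. The model below is an assumption made for illustration, not a real deep network: it has just two weights, `a` and `b`, multiplied together like two stacked layers, and it is trained with plain gradient descent to learn `y = 2x`. The data, learning rate, and seeds are all hypothetical choices.

```python
import random

XS = [-1.0, -0.5, 0.5, 1.0]
YS = [2.0 * x for x in XS]  # the pattern to learn: y = 2x

def train(seed, steps=500, lr=0.1):
    """Train the toy model y = a * b * x from a random starting point."""
    rng = random.Random(seed)
    a = rng.uniform(0.5, 1.5)  # random initial weights
    b = rng.uniform(0.5, 1.5)
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for x, y in zip(XS, YS):
            err = a * b * x - y
            # Gradient of the mean squared error with respect to each weight.
            grad_a += 2 * err * b * x / len(XS)
            grad_b += 2 * err * a * x / len(XS)
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b

a1, b1 = train(seed=0)
a2, b2 = train(seed=1)
print("run 1 weights:", a1, b1, "-> prediction for x=3:", a1 * b1 * 3)
print("run 2 weights:", a2, b2, "-> prediction for x=3:", a2 * b2 * 3)
```

Both runs end up predicting essentially the same values, yet the individual weights differ, because many different pairs multiply to 2. Real networks have vastly more weights and many more equivalent solutions, so inspecting the weights of one run tells you little about another.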

Humans picture the world in three dimensions. AI models don’t have that limit. They often work in spaces with hundreds or thousands of dimensions. This makes it easier for the models to pick up subtle patterns, but much harder for us to understand what they have learned.

Try imagining a shape in 300-dimensional space. You can’t. And neither can anyone else. But that’s where the model lives. It learns rules in that space, not in the space we’re used to, which makes it difficult to explain its behavior in everyday terms.
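One rough way to get a feel for this: take a single point in 300-dimensional space and ask how much of it survives when you flatten it onto a 2-D plot. The sketch below uses random numbers as a stand-in for a model's internal representation, which is an assumption for illustration. The seed and variable names are arbitrary.

```python
import random

DIMS = 300
rng = random.Random(42)

# A hypothetical 300-dimensional point, standing in for a model's internal state.
point = [rng.gauss(0.0, 1.0) for _ in range(DIMS)]

def squared_length(vec):
    """Sum of squared coordinates (squared Euclidean length)."""
    return sum(v * v for v in vec)

full = squared_length(point)
visible = squared_length(point[:2])  # what a plot of the first two axes keeps
fraction_visible = visible / full

print(f"share of the vector visible in a 2-D plot: {fraction_visible:.1%}")
```

For a point like this, the two plotted coordinates typically account for only a small sliver of the whole. Any picture we draw of the model's space throws most of it away.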

Inside each layer of a neural network are functions that decide what to do with the incoming information. Many of these functions are nonlinear, meaning they twist the data in ways that aren’t straightforward. These twists help the model handle complicated tasks, but they also make the flow of information more obscure.

It’s not just A plus B equals C. It might be a squashed, curved version of that relationship, and the relationship shifts slightly each time the weights are updated during training. This nonlinearity builds strength, but hides logic.
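As a small illustration, compare a plain sum with the same sum passed through a sigmoid, one common nonlinear function. The function names here are made up for the example. Real networks may use other nonlinearities.

```python
import math

def linear(a, b):
    """Plain addition: equal steps in, equal steps out."""
    return a + b

def squashed(a, b):
    """The same sum pushed through a sigmoid, which bends it into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-(a + b)))

for a, b in [(0, 0), (1, 1), (2, 2), (4, 4)]:
    print(f"a={a}, b={b}: linear={linear(a, b)}, squashed={squashed(a, b):.4f}")
```

In the linear version, each extra unit of input adds the same amount to the output. In the squashed version, the early steps move the output a lot and the later steps barely move it at all, so you can no longer read the effect of an input straight off the result.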

Sometimes, AI systems combine multiple models into one, which is known as an ensemble. This could be a bunch of decision trees or a mix of different deep learning models. Each model votes or weighs in, and then a final decision is made.

While this often improves accuracy, it reduces transparency. Even if you could explain one model, explaining the combination is far harder. Each part might make sense on its own, but together, they create a tangled web.
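Here is a minimal sketch of majority voting, using three deliberately simple stand-in models for a loan decision. The model functions, field names, and thresholds are all hypothetical. A real ensemble might combine hundreds of learned trees rather than three readable rules.

```python
from collections import Counter

# Three hypothetical "models", each a simple rule for illustration.
def model_income(applicant):
    return "approve" if applicant["income"] >= 40000 else "deny"

def model_debt(applicant):
    return "approve" if applicant["debt_ratio"] < 0.4 else "deny"

def model_history(applicant):
    return "approve" if applicant["years_of_credit"] >= 3 else "deny"

MODELS = [model_income, model_debt, model_history]

def ensemble_predict(applicant):
    """Return the majority decision along with each model's individual vote."""
    votes = [model(applicant) for model in MODELS]
    winner, _ = Counter(votes).most_common(1)[0]
    return winner, votes

applicant = {"income": 50000, "debt_ratio": 0.5, "years_of_credit": 5}
decision, votes = ensemble_predict(applicant)
print("votes:", votes)
print("final decision:", decision)
```

Even in this tiny case, the final answer is "approve" although one model said "deny." With hundreds of voters, each of them a learned model rather than a one-line rule, explaining why the majority landed where it did becomes much harder.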

In systems like game-playing bots, and in some approaches to self-driving cars, reinforcement learning comes into play. The AI isn’t trained on static data. Instead, it learns by acting and receiving feedback. It’s rewarded for making good choices and penalized for bad ones. Over time, it figures out how to behave.

But in these systems, the "why" is often buried in trial-and-error history. The model didn’t learn a rule; it just found something that worked over time. That history may span millions of actions, and retracing it is nearly impossible.
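A very small version of this idea is the "multi-armed bandit": an agent repeatedly picks one of a few options and only learns from the rewards it gets back. The sketch below uses an epsilon-greedy strategy, which is one simple choice among many. The payout probabilities, step count, and function name are assumptions for illustration.

```python
import random

def run_bandit(seed=0, steps=5000, epsilon=0.1):
    """Learn by trial and error which of three options pays off most often."""
    rng = random.Random(seed)
    payout_prob = [0.2, 0.5, 0.8]  # hypothetical, hidden from the agent
    estimates = [0.0, 0.0, 0.0]    # the agent's running guess of each option's value
    counts = [0, 0, 0]             # how often each option was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(3)  # explore: try something at random
        else:
            action = max(range(3), key=lambda i: estimates[i])  # exploit best guess
        reward = 1.0 if rng.random() < payout_prob[action] else 0.0
        counts[action] += 1
        # Nudge the running average toward the reward just observed.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

estimates, counts = run_bandit()
print("learned value estimates:", estimates)
print("times each option was chosen:", counts)
```

The agent ends up favoring the best option, but all it keeps is three numbers. The thousands of individual tries that produced them are gone, and nothing in the result states a rule for why that option is best.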

AI black boxes aren’t black because someone wants to hide something—they’re black because they work in ways we don’t fully grasp yet. From deep layers and abstract features to randomness and nonlinear math, these systems have outpaced our ability to trace them step by step. They can be smart and useful, but understanding them takes more than looking at the code. It takes new tools, fresh thinking, and a willingness to ask the hard questions.
