Python is simple, clean, and a favorite for beginners and experts alike. However, under the hood, some quirks can affect how Python behaves in real-world projects. One of those quirks is something called the Python Global Interpreter Lock (GIL). It's a mechanism that has sparked debates in the developer community for years. While it's not something you see in everyday code, it plays a major role in how Python handles threads and concurrency. If you've ever worked with Python multithreading and felt that it didn't give the speed boost you expected, the GIL is likely the reason.

This article breaks down what the GIL is, how it operates, and what it means for performance. We'll keep things grounded, skip the jargon, and focus on clarity.

The Python Global Interpreter Lock, or simply the GIL, is a mutex. A mutex (mutual exclusion lock) ensures that only one thread at a time can execute the code it protects. In CPython, the GIL guarantees that only one thread can run Python bytecode at a time, even on multi-core processors.

This means that even if your program creates several threads using Python's threading module, only one thread runs Python code at any moment. The rest wait for their turn. This lock applies to the CPython implementation (which is the most widely used version of Python), not to Python as a language concept. So if you’re using CPython, you’re using the GIL by default.

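Why does this exist? Mainly to protect Python's memory management. CPython uses reference counting to decide when an object can be freed: each object tracks how many references point to it, and it is deallocated when that count drops to zero. The GIL stops two threads from modifying a reference count simultaneously, which could corrupt the count and lead to memory leaks or crashes.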
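Reference counts can be observed directly in CPython. The snippet below is a minimal sketch using `sys.getrefcount`; the variable names are illustrative, and the absolute numbers it prints are a CPython implementation detail that can differ between versions, so only the change in the count is meaningful.

```python
import sys

data = []                        # the name `data` holds a reference to the list
before = sys.getrefcount(data)   # the call itself adds a temporary reference

alias = data                     # a second name now refers to the same list
after = sys.getrefcount(data)

# Binding `alias` raised the count by one; `del alias` would lower it again.
print(f"before: {before}, after: {after}")
```

Every assignment, function call, and deletion adjusts counts like these, which is why unsynchronized updates from two threads would be dangerous.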

To understand how the GIL works, it helps to look at how Python threads operate.

In Python, when you start a new thread with the threading module, it doesn’t run in full parallel with other threads like in some other languages. Instead, Python's interpreter gives control to one thread at a time. The GIL is the gatekeeper that switches control from one thread to another.

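This switch happens every few milliseconds: by default, CPython asks the running thread to release the GIL after a switch interval of 5 milliseconds when another thread is waiting. This is a form of preemptive multitasking, in which the interpreter, not your code, decides when to pause a thread and let another one take over. While one thread holds the GIL, others are blocked from running Python code, even if they’re ready.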
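In CPython 3.2 and later, the timing of these switches is governed by a switch interval that can be read with `sys.getswitchinterval()` and changed with `sys.setswitchinterval()`. A minimal sketch for inspecting it:

```python
import sys

# The switch interval is expressed in seconds.
interval = sys.getswitchinterval()
print(f"switch interval: {interval * 1000:.1f} ms")
```

Changing the interval rarely helps CPU-bound threads. It only alters how often they trade the GIL, not how many can run at once.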

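Here’s what typically happens: one thread acquires the GIL and runs Python bytecode, it releases the GIL when its time slice ends or when it blocks on I/O, and a waiting thread then acquires the GIL and takes its turn.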

This back-and-forth happens rapidly. But since only one thread is active at a time, you don’t get true multi-core performance from Python threads if they’re running CPU-bound code.
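A rough way to see this on your own machine is to time the same CPU-bound work run sequentially and then in threads. The sketch below assumes a standard (GIL-enabled) CPython build; the function names `count_down`, `run_sequential`, and `run_threaded` are made up for illustration, and the timings will vary by machine and Python version.

```python
import threading
import time


def count_down(n):
    """Pure-Python busy loop: CPU-bound, so it needs the GIL the whole time."""
    while n > 0:
        n -= 1


def run_sequential(n, repeats):
    """Run the loop `repeats` times, one after another, and return elapsed seconds."""
    start = time.perf_counter()
    for _ in range(repeats):
        count_down(n)
    return time.perf_counter() - start


def run_threaded(n, repeats):
    """Run the loop in `repeats` threads at once and return elapsed seconds."""
    threads = [threading.Thread(target=count_down, args=(n,)) for _ in range(repeats)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


sequential = run_sequential(1_000_000, 4)
threaded = run_threaded(1_000_000, 4)
print(f"sequential: {sequential:.3f}s  threaded: {threaded:.3f}s")
```

On a GIL-enabled interpreter the two numbers are usually close, because the four threads take turns rather than running side by side.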

However, if the threads are doing I/O tasks, such as reading from disk, making network requests, or waiting for user input, the picture changes. A thread releases the GIL while it waits on a blocking I/O call, so other threads can acquire the GIL and run their code in the meantime.

The Python Global Interpreter Lock directly affects how well Python can perform in threaded environments, especially for programs that are CPU-bound. This becomes most noticeable when using Python multithreading on modern multi-core processors. Even if your system has multiple cores, Python threads can’t run truly in parallel when executing bytecode, because only one thread holds the GIL at a time.

CPU-bound tasks highlight this limitation. When your code involves calculations, simulations, or data-heavy operations using threads, the GIL restricts execution to one thread at a time. More threads don’t mean more speed; they can actually slow things down because of the overhead of context switching and contention for the GIL.

On the other hand, I/O-bound tasks benefit from Python multithreading. If your program is frequently reading files, making network calls, or waiting for input, a blocked thread releases the GIL while it waits. This gives other threads a chance to run, which improves efficiency.
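The effect is easy to reproduce with a stand-in for blocking I/O. This sketch uses `time.sleep`, which releases the GIL while waiting, in place of a real network or disk call; `fake_io` and `timed_threads` are hypothetical helper names.

```python
import threading
import time


def fake_io(delay):
    """Stand-in for a blocking call; time.sleep releases the GIL while waiting."""
    time.sleep(delay)


def timed_threads(count, delay):
    """Start `count` threads that each wait `delay` seconds; return elapsed seconds."""
    threads = [threading.Thread(target=fake_io, args=(delay,)) for _ in range(count)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


# Five waits of 0.2 s each would take about 1 s back to back.
elapsed = timed_threads(5, 0.2)
print(f"elapsed: {elapsed:.2f}s")
```

Because the waits overlap, the total is typically close to a single wait rather than the sum of all five.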

For true parallelism in CPU-heavy code, Python developers often bypass the GIL in three ways:

Multiprocessing: Separate processes with independent memory and GILs allow real parallel execution.

Native extensions: Tools like NumPy or Cython can release the GIL during computation.

Alternative interpreters: Jython and IronPython avoid the GIL entirely, while PyPy, which has its own GIL, has experimented with workarounds. These interpreters often lack full support for CPython C extensions.
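As a sketch of the multiprocessing option, the example below spreads a CPU-bound function across worker processes with `concurrent.futures.ProcessPoolExecutor`. The prime-counting workload and the names `count_primes` and `count_primes_parallel` are illustrative assumptions, not part of any library.

```python
from concurrent.futures import ProcessPoolExecutor


def count_primes(limit):
    """CPU-bound work: count primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def count_primes_parallel(limits, workers=4):
    """Run count_primes for each limit in a separate worker process."""
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(count_primes, limits))


if __name__ == "__main__":
    # The guard keeps worker processes from re-running this block on import.
    print(count_primes_parallel([20_000, 20_000, 20_000, 20_000]))
```

Each worker is a full Python process with its own interpreter and its own GIL, so the calls can run on separate cores. The cost is that arguments and results must be pickled and copied between processes.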

Understanding when the GIL is a bottleneck helps developers choose better strategies based on their application's workload.
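The native-extension route can be tried without installing anything. The sketch below swaps in the standard library's `hashlib` as a stand-in for tools like NumPy: its documentation states that the GIL is released while hashing more than 2047 bytes of data, so threads hashing large buffers can overlap. The `digest` helper and payload sizes are illustrative.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def digest(payload):
    """Hash one buffer; hashlib's C code releases the GIL for large inputs."""
    return hashlib.sha256(payload).hexdigest()


# Four 1 MB buffers, each filled with a different byte value.
payloads = [bytes([i]) * 1_000_000 for i in range(4)]

with ThreadPoolExecutor(max_workers=4) as pool:
    threaded_digests = list(pool.map(digest, payloads))

# The threaded results match a plain sequential run.
sequential_digests = [digest(p) for p in payloads]
print(threaded_digests == sequential_digests)
```

Whether threads help here depends on how much of the time is spent inside the extension with the GIL released, so it is worth measuring on a real workload.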

The Python Global Interpreter Lock gets a lot of criticism, but it’s not all bad. It has trade-offs, and understanding both sides helps in writing better Python code.

Simplicity in memory management: Python’s memory model is built around reference counting. The GIL avoids complex locking mechanisms, which helps avoid race conditions in many cases.

Thread safety for C extensions: Many third-party modules rely on the GIL to avoid writing extra code for thread safety.

Easy to write and debug single-threaded code: For many small to medium projects, the GIL isn’t a problem at all.

Poor CPU-bound performance in threads: Threads don’t run in parallel for CPU tasks. You don’t get the speedup you’d expect on multi-core machines.

Wasted cores: Modern CPUs have multiple cores. The GIL makes it hard for Python to use them fully with threads.

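Unpredictable behavior: The GIL does not make your own code thread-safe. A thread can be paused between any two bytecode instructions, so compound operations on shared data can still interleave and cause race conditions, and the timing of thread switches is hard to predict.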

Because of these limitations, Python developers often think carefully before using threads. In some cases, it's better to use processes or turn to different tools when scaling performance.
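When threads are the right choice and they share mutable state, that state still needs explicit protection, GIL or not. Here is a minimal sketch with `threading.Lock`; the `SafeCounter` class is a hypothetical example, not a standard library type.

```python
import threading


class SafeCounter:
    """Counter whose increments are protected by an explicit lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # `self.value += 1` is a read, an add, and a write; the lock keeps
        # another thread from slipping in between those steps.
        with self._lock:
            self.value += 1


def add_many(counter, times):
    for _ in range(times):
        counter.increment()


counter = SafeCounter()
threads = [threading.Thread(target=add_many, args=(counter, 10_000)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter.value)  # 40000
```

Without the lock, the final count is not guaranteed, because an update can be lost whenever a thread switch lands in the middle of an increment.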

The Python Global Interpreter Lock limits true multi-threaded performance in CPU-heavy tasks but keeps memory management simple and stable. While it prevents threads from executing Python bytecode in parallel, the limitation is manageable with tools such as multiprocessing or C extensions. Knowing how the GIL works helps you write smarter code and avoid surprises when scaling Python programs across multiple cores.

Advertisement

How to generate and edit DALL·E 3 images step by step using the Copilot Image Creator. Discover how to turn text prompts into stunning visuals and make quick edits—all without design skills

Learn how to access GPT-4 on ChatGPT. Follow these steps to unlock AI features and boost your productivity.

How can AI help storytellers in filmmaking? Discover how LTX Studio uses AI to generate scenes from written descriptions, making the video creation process faster and more flexible for creators

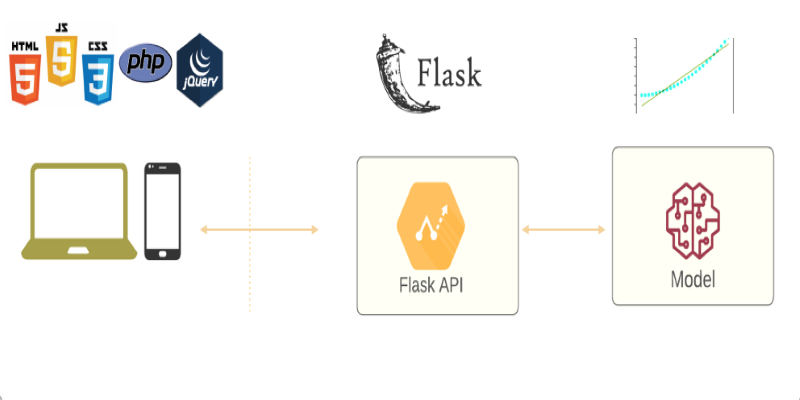

Learn how to deploy your machine learning model with Flask in 2025. Step-by-step guide on setting up Flask, testing your API, and making your model production-ready

Learn how artificial intelligence organizes random data, increases output, and drives efficiency throughout content management

From sorting files to predicting storage needs, see how AI helps manage and optimize your data storage without any effort.

How the Python Global Interpreter Lock (GIL) works, how it affects threading and performance, and when Python multithreading is useful or limited

Discover UiPath's latest update with smarter AI bots, easier bot design, improved integration, and powerful automation tools

Adobe plans to integrate generative AI tools from OpenAI and others to boost creative workflows and user productivity

Explore 10 emerging trends in beauty tech transforming how cosmetics are created, marketed, and applied.

Know what an AI accelerator is, how it works, and why it's vital for powering today's smart tech and intelligent systems

Explore machine learning use cases and business benefits from marketing to cybersecurity.